Network engineers like redundancy. It’s not just that we want two of everything: we want the networks we design and manage to be super fast, super smart, and super resilient. In the LAN and in the data center, we’ve been logically joining network switches using technologies such as Cisco StackWise, the Virtual Switching System (VSS), and virtual Port Channels (vPCs) with fabric extenders (FEXs). The goal is to consolidate control and data plane activities and to gain greater fault tolerance, easier management, and Multichassis EtherChannel for path redundancy. These are great benefits, but they can be reaped only with proper design. Otherwise, an engineer may introduce more risk into the network rather than make it more resilient.

We can stack fixed form factor switches such as the Catalyst 2960-X, 3750-X and 3850 to unify the control planes of all the stack members. An end host can connect to two separate members of the stack, which appear as one logical switch, giving the host an EtherChannel link that spans multiple pieces of hardware. In this way, StackWise uses a shared control plane design to provide switch engine redundancy and link diversity for the end host. This is commonly used in IDFs for access layer port density, but also in building MDFs to dual-home downstream switches or servers.

Similarly, VSS merges the control planes of two Catalyst 4500 chassis switches or two 4500-X fixed form factor switches. The 4500 chassis switch already has dual supervisors and multiple power supplies, so using VSS to logically join two of these large chassis switches provides some serious fault tolerance. To an extent, VSS on the 4500-X platform works like StackWise, with the active switch managing both devices. These platforms lend themselves to use in the distribution layer or in a collapsed core.

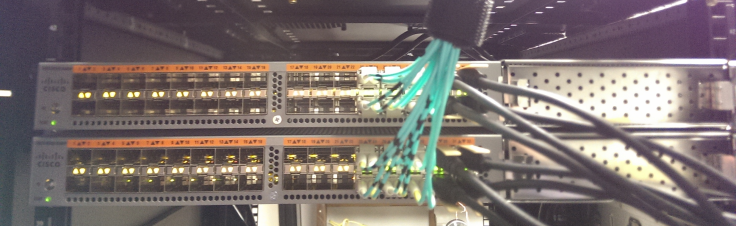

vPCs don’t by themselves merge the control planes of Nexus switches, but when vPCs are used with Nexus fabric extenders, a network engineer can consolidate the control plane of all the top-of-rack switches in a row, or in an entire data center, into a single device (or pair of devices).

At heart, these technologies centralize the control plane. The benefit of increased hardware and link fault tolerance is very clear, but the drawbacks are often overlooked.

When the control plane of multiple switches is consolidated onto one switch, the switch engine always sits on some piece of hardware that is also forwarding traffic. In the 4500 chassis switches, the supervisor engines take up a couple of slots in the chassis, but on all the other platforms the switch engine is itself a fixed switch that forwards traffic. This means that a single failure will likely take down one of the redundant links to many end hosts at once. Surviving that is the whole point of link diversity, but it presupposes a correct overall design.
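To make the failure-domain point concrete, here is a minimal Python sketch that models a switch stack as a set of members and each end host as the set of members it has uplinks to. The member names (`sw1` to `sw3`), host names, topology, and the `impact_of_member_failure` helper are all hypothetical, and the model deliberately ignores master re-election and reconvergence timing. It only asks which hosts keep at least one link when a single member fails.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    uplinks: frozenset  # hypothetical: the stack members this host has a link to


def impact_of_member_failure(hosts, failed_member):
    """Classify hosts after one stack member (e.g. the active switch) fails."""
    isolated, degraded, unaffected = [], [], []
    for host in hosts:
        if failed_member not in host.uplinks:
            unaffected.append(host.name)
        elif host.uplinks - {failed_member}:
            # A link survives on another member, so the EtherChannel stays up
            # but the host has lost its redundancy.
            degraded.append(host.name)
        else:
            # Every uplink landed on the failed member: no connectivity left.
            isolated.append(host.name)
    return isolated, degraded, unaffected


# Hypothetical three-member stack; sw1 is assumed to hold the active switch engine.
hosts = [
    Host("server-a", frozenset({"sw1", "sw2"})),    # dual-homed across members
    Host("server-b", frozenset({"sw1"})),           # single-homed to sw1
    Host("idf-switch", frozenset({"sw2", "sw3"})),  # dual-homed, avoids sw1
]

isolated, degraded, unaffected = impact_of_member_failure(hosts, "sw1")
print("isolated:", isolated)
print("degraded:", degraded)
print("unaffected:", unaffected)
```

Failing `sw1` isolates `server-b` completely, while `server-a` only loses redundancy. A single-homed host gains nothing from the stack; it simply inherits the fate of whichever member it happens to be plugged into.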

Consolidating the control plane can increase the failure domain.

This sort of fate sharing can be dangerous in the LAN and data center core, and the design requires significantly more planning to actually produce the resiliency a network engineer wants. For example, are all end hosts and downstream switches dual-homed? If not, a switch stack or VSS won’t help them. Are both Nexus 5548 parent switches powered by the same circuit? Since FEXs send all their traffic to the parent switch for forwarding and policy decisions, losing the parent switches to a power failure would stop the data plane for every single FEX associated with them.
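The power-circuit question can be checked with an equally small model. This is a sketch under stated assumptions: every FEX is dual-homed to both Nexus 5548 parents, a FEX forwards only while at least one parent is powered, and the FEX names, parent names, circuit labels, and the `fex_data_plane_up` helper are hypothetical.

```python
def fex_data_plane_up(fex_parents, parent_circuit, failed_circuits):
    """Return {fex: True/False} for whether each FEX can still forward.

    In this model a FEX has no local forwarding logic, so it forwards
    only if at least one of its parent switches still has power.
    """
    powered = {
        parent
        for parent, circuit in parent_circuit.items()
        if circuit not in failed_circuits
    }
    return {fex: bool(parents & powered) for fex, parents in fex_parents.items()}


# Hypothetical row of four FEXs, each dual-homed to both parent switches.
fex_parents = {f"fex{n}": {"n5k-1", "n5k-2"} for n in range(101, 105)}

# Two ways of cabling power to the parent pair (hypothetical circuit labels).
same_circuit = {"n5k-1": "circuit-A", "n5k-2": "circuit-A"}
split_circuits = {"n5k-1": "circuit-A", "n5k-2": "circuit-B"}

for label, mapping in [("same circuit", same_circuit), ("split circuits", split_circuits)]:
    status = fex_data_plane_up(fex_parents, mapping, failed_circuits={"circuit-A"})
    up = sum(status.values())
    print(f"{label}: {up}/{len(status)} FEXs forwarding after circuit-A fails")
```

With both parents on one circuit, losing that circuit leaves zero FEXs forwarding even though every FEX is dual-homed. Splitting the parents across circuits keeps all four up. The dual-homing only pays off once the shared dependency underneath it is removed.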

The real key is to let traffic in the data plane continue in the event of a control plane failure. Today, vendors are investigating disaggregating the network operating system from the underlying hardware. This would consolidate the control plane on one device (or HA pair) that doesn’t pass traffic itself. If both the primary and failover controllers failed, the data plane would remain mostly unaffected while network engineers repair the problem. There are still open questions about how MAC learning and link layer protocols such as LLDP would work, but this approach completely changes the dynamics of fate sharing in the LAN and data center. Proper planning and design are still necessary, but the technology would provide the out-of-the-box fault tolerance engineers want in their networks.

Using the latest version of StackWise (StackWise-480), VSS, and Nexus fabric extenders makes sense in building IDFs and data center racks to provide cheaper, easy-to-manage port density. When designing large building, campus and data center cores, however, consider whether these technologies really add significant resiliency. Maybe the risk isn’t worth the benefit, or maybe the design itself undermines the technology. Until we move to a disaggregated switch design, with a pair of intelligent controllers managing dumb hardware all over campus, engineers should be careful not to add more risk to their networks than resiliency.

Thanks for posting this. I am rolling out my first Nexus switches, so I used your guide and started browsing around. I came to this exact conclusion last month when Cisco released “Patch hell June”, in which nearly 60 vulnerabilities were disclosed. We had to patch the core, and during the reboots I documented every outage so I could introduce more redundancy. In many cases, that will mean breaking apart my switch stacks so that one reboot will not take down both sets of ports.

In any case, I concur with your conclusions. I just wish I had found this a couple of years ago… it may have influenced my design decisions for the better.

Network administration is not an easy job. That’s why redundancy is a best practice to keep the network in good shape.